371: Provide a sort error handler r=Kerollmops a=irevoire

This PR simplifies the error handling of asc-desc rules for Meilisearch, or any other wrapper, by providing directly in milli a new error type called `SortError`, which can be generated from an `AscDescError` and automatically converted to a `UserError`.

Now, whether you are in user code or in milli, you can parse the `AscDesc` syntax and, depending on the context, convert the resulting error to either a `SortError` or a `CriterionError` in one line, with improved error messages.
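As a rough illustration of the conversion chain described above, here is a minimal sketch. The type names `AscDescError`, `SortError` and `UserError` come from this PR, but the variants, the `AscDesc` enum, and the `parse_asc_desc` and `parse_sort_rule` helpers are simplified assumptions made for this example and may not match milli's actual definitions.

```rust
// Hypothetical, simplified stand-ins for milli's types: the variants and
// fields below are assumptions for illustration only.
#[derive(Debug, PartialEq)]
enum AscDescError {
    InvalidSyntax { name: String },
    ReservedKeyword { name: String },
}

#[derive(Debug, PartialEq)]
enum SortError {
    InvalidName { name: String },
    ReservedName { name: String },
}

#[derive(Debug, PartialEq)]
enum UserError {
    SortError(SortError),
}

// An `AscDescError` can be turned into a `SortError`...
impl From<AscDescError> for SortError {
    fn from(error: AscDescError) -> Self {
        match error {
            AscDescError::InvalidSyntax { name } => SortError::InvalidName { name },
            AscDescError::ReservedKeyword { name } => SortError::ReservedName { name },
        }
    }
}

// ...and a `SortError` can be turned into a `UserError`.
impl From<SortError> for UserError {
    fn from(error: SortError) -> Self {
        UserError::SortError(error)
    }
}

#[derive(Debug, PartialEq)]
enum AscDesc {
    Asc(String),
    Desc(String),
}

// Hypothetical parser for the `field:asc` / `field:desc` syntax.
// Treating `_geo` as a reserved name is an assumption of this sketch.
fn parse_asc_desc(text: &str) -> Result<AscDesc, AscDescError> {
    match text.rsplit_once(':') {
        Some((name, _)) if name == "_geo" => {
            Err(AscDescError::ReservedKeyword { name: name.to_string() })
        }
        Some((name, "asc")) => Ok(AscDesc::Asc(name.to_string())),
        Some((name, "desc")) => Ok(AscDesc::Desc(name.to_string())),
        _ => Err(AscDescError::InvalidSyntax { name: text.to_string() }),
    }
}

// In a sort context, the parse error is converted in one line.
fn parse_sort_rule(text: &str) -> Result<AscDesc, UserError> {
    parse_asc_desc(text).map_err(SortError::from).map_err(UserError::from)
}
```

With these definitions, `parse_sort_rule("price:asc")` yields an `AscDesc::Asc`, while `parse_sort_rule("price")` yields a `UserError::SortError` wrapping the invalid name.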

Co-authored-by: Tamo <tamo@meilisearch.com>

1703: Trigger CodeCoverage manually instead of on each PR r=irevoire a=curquiza

Since no one is using it now on the PRs, we would rather get a state of the code coverage once (triggered manually) rather than on each PR.

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1724: Redo CONTRIBUTING.md r=curquiza a=curquiza

- Update `Development` section

- Update the `Git Guidelines` section

- Remove `Benchmarking & Profiling` -> done on the milli side at the moment

- Remove `Humans` -> synchronization job done by the manager of the core team at the moment

- Remove `Changelog` section -> done by the manager and the docs team

- Remove `Documentation` section -> job done by the manager to synchronize both teams.

Fixes #1723 at the same time

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

370: Change chunk size to 4MiB to better fit end-user usage r=ManyTheFish a=ManyTheFish

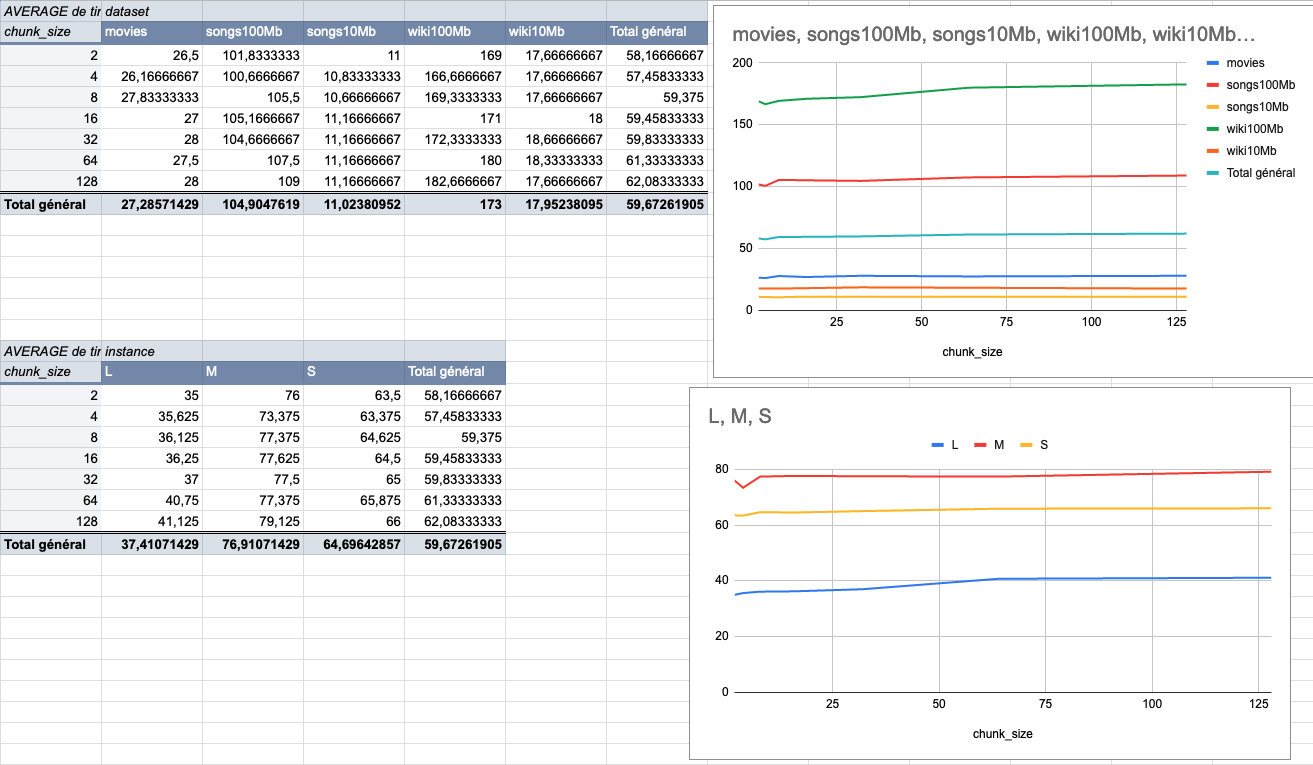

We ran several indexing tests using datasets of different sizes (5 datasets from 9MiB to 100MiB) on several types of VMs (`XS: 1GiB RAM, 1 VCPU`, `S: 2GiB RAM, 2 VCPU`, `M: 4GiB RAM, 3 VCPU`, `L: 8GiB RAM, 4 VCPU`).

The results of these tests show that `4MiB` seems to be the best chunk size among those tested (`2MiB`, `4MiB`, `8MiB`, `16MiB`, `32MiB`, `64MiB`, `128MiB`).

Below is the average time per chunk size:

<details>

<summary>Detailed data</summary>

<br>

</br>

</details>

Co-authored-by: many <maxime@meilisearch.com>

369: Add test checking the bug reported in meilisearch issue 1716 r=Kerollmops a=ManyTheFish

The bug is not present in the newer milli version.

Related to [Meilisearch#1716](https://github.com/meilisearch/MeiliSearch/issues/1716)

Co-authored-by: many <maxime@meilisearch.com>

366: Geosearch error handling r=Kerollmops a=irevoire

Rewrite most of the geosearch error handling and add another batch of tests on criterion parsing.

Co-authored-by: Tamo <tamo@meilisearch.com>

Co-authored-by: Irevoire <tamo@meilisearch.com>

364: Fix all the benchmarks r=Kerollmops a=irevoire

#324 broke all benchmarks.

I fixed everything and noticed that `cargo check --all` was insufficient to check the bench in multiple workspaces, so I also updated the CI to use `cargo check --workspace --all-targets`.

Co-authored-by: Tamo <tamo@meilisearch.com>

363: Fix the returned `AscDesc` error r=Kerollmops a=irevoire

With my previous PR on the geosearch, I erased the change I had introduced in the PR before it: the new error type returned when we fail to parse the `AscDesc` type.

Sorry for that, here is the fix.

Co-authored-by: Tamo <tamo@meilisearch.com>

357: Add benchmarks for the geosearch r=Kerollmops a=irevoire

closes #336

Should I merge this PR in #322 and then we merge everything in `main` or should we wait for #322 to be merged and then merge this one in `main` later?

Co-authored-by: Tamo <tamo@meilisearch.com>

Co-authored-by: Irevoire <tamo@meilisearch.com>

324: Implement documents API r=Kerollmops a=MarinPostma

This PR implements the intermediary document representation for milli. The JSON, JSONL and CSV formats are replaced with this format, to push the serialization duty to the client side.

The `documents` module contains the interface to the new document format:

- The `DocumentsBuilder` allows the creation of a writer-backed document addition, where documents are added either one by one or as arrays of depth 1. This is made possible by the fact that the serializer used by the `add_documents` methods only accepts `[Object]` and `Object`. The related serialization logic is located in the `serde.rs` file.

- The `DocumentsReader` allows iterating over the documents created by a `DocumentsBuilder`. A call to `next_document_with_index` returns the next obkv reader in the document addition, along with a reference to the index used to map the field ids in the obkv reader to the field names.

All references to JSON, JSONL or CSV in the tests have been replaced with the `documents!` macro, which works exactly like the `serde_json::json` macro and is a convenient way to create a `DocumentsReader`.

Rewrote the search CLI as the `cli` crate, which now also allows index manipulation. It only offers basic functionality for now, but is meant to be easier to extend than the HTTP UI.
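To make the "`Object` or `[Object]` only" constraint concrete, here is a minimal, self-contained sketch. It does not use milli's actual `DocumentsBuilder` or its serializer: the `Value` enum, `SketchBuilder`, `AddError` and the `doc` helper are hypothetical names invented for this example, and the real implementation works through serde rather than on an in-memory value.

```rust
use std::collections::BTreeMap;

// Hypothetical miniature JSON-like value, standing in for whatever the
// real serializer receives.
#[derive(Debug, Clone, PartialEq)]
enum Value {
    Number(f64),
    Array(Vec<Value>),
    Object(BTreeMap<String, Value>),
}

type Document = BTreeMap<String, Value>;

#[derive(Debug, PartialEq)]
enum AddError {
    // The input was neither an object nor an array of objects.
    NotAnObject,
}

// Hypothetical builder that only accepts `Object` and `[Object]`.
#[derive(Default)]
struct SketchBuilder {
    documents: Vec<Document>,
}

impl SketchBuilder {
    // Adds one document or an array of depth 1, returning how many were added.
    fn add_documents(&mut self, value: Value) -> Result<usize, AddError> {
        match value {
            Value::Object(document) => {
                self.documents.push(document);
                Ok(1)
            }
            Value::Array(items) => {
                // Validate every item first so that a failure leaves the
                // builder unchanged.
                let mut batch = Vec::with_capacity(items.len());
                for item in items {
                    match item {
                        Value::Object(document) => batch.push(document),
                        _ => return Err(AddError::NotAnObject),
                    }
                }
                let added = batch.len();
                self.documents.extend(batch);
                Ok(added)
            }
            _ => Err(AddError::NotAnObject),
        }
    }
}

// Small helper building a one-field document such as `{ "id": 1 }`.
fn doc(id: f64) -> Value {
    let mut fields = BTreeMap::new();
    fields.insert("id".to_string(), Value::Number(id));
    Value::Object(fields)
}
```

In this sketch a single object and a flat array of objects are both accepted, while a nested array or a bare scalar is rejected, which mirrors the depth-1 restriction described in the list above.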

blocked by #308

Co-authored-by: mpostma <postma.marin@protonmail.com>

360: Update version for the next release (v0.14.0) r=Kerollmops a=curquiza

Release containing the geosearch, cf #322

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>