2076: fix(dump): Fix the import of dump from the v24 and before r=ManyTheFish a=irevoire

Same as https://github.com/meilisearch/MeiliSearch/pull/2073 but on main this time

Co-authored-by: Irevoire <tamo@meilisearch.com>

2066: bug(http): fix task duration r=MarinPostma a=MarinPostma

`@gmourier` found that the duration in the task view was not computed correctly; this PR fixes it.

`@curquiza`, I'll let you decide whether we need to make a hotfix out of this or wait for the next release. This is not breaking.

Co-authored-by: mpostma <postma.marin@protonmail.com>

2057: fix(dump): Uncompress the dump IN the data.ms r=irevoire a=irevoire

When loading a dump with docker, we had two problems.

After creating a tempdirectory, uncompressing and re-indexing the dump:

1. We try to `move` the new `data.ms` onto the one currently present. The problem is that the `data.ms` is often a mount point, because that's what people usually do with docker. We can't overwrite a mount point, and thus we were throwing an error.

2. The tempdir is created in `/tmp`, which is usually quite small AND may not be on the same partition as the `data.ms`. This means that when we tried to move the dump over the `data.ms`, it also failed because we can't move data between two partitions.

------------------

1 was fixed by deleting the *content* of the `data.ms` and moving the *content* of the tempdir *inside* the `data.ms`. If someone tries to create volumes inside the `data.ms`, that's their problem, not ours.

2 was fixed by creating the tempdir *inside* of the `data.ms`. If a user mounted their `data.ms` on a large partition, there is no reason they should be unable to load a big dump because their `/tmp` was too small. This solves the issue; now the dump is extracted and indexed on the same partition where the `data.ms` will live.
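
The two fixes can be sketched roughly as follows. This is a minimal illustration using only the standard library, not the actual code of the PR: the function name `replace_db_content` and the `staging` parameter are hypothetical, and the sketch assumes the staging directory was created directly inside the database directory.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Replace the content of `db_path` with the content of `staging`.
///
/// Assumption: `staging` is a directory located directly inside `db_path`,
/// so both live on the same partition. `db_path` itself is never removed
/// or renamed, which means it may safely be a mount point.
pub fn replace_db_content(db_path: &Path, staging: &Path) -> io::Result<()> {
    // Collect the entries first so we do not delete while iterating.
    let old_entries = fs::read_dir(db_path)?.collect::<io::Result<Vec<_>>>()?;

    // 1. Delete the *content* of the database directory, except the staging dir.
    for entry in old_entries {
        let path = entry.path();
        if path == staging {
            continue;
        }
        // `file_type` does not follow symlinks, so a symlink is removed as a file.
        if entry.file_type()?.is_dir() {
            fs::remove_dir_all(&path)?;
        } else {
            fs::remove_file(&path)?;
        }
    }

    // 2. Move the *content* of the staging directory one level up.
    //    Source and destination are on the same partition, so `rename` works.
    let new_entries = fs::read_dir(staging)?.collect::<io::Result<Vec<_>>>()?;
    for entry in new_entries {
        fs::rename(entry.path(), db_path.join(entry.file_name()))?;
    }

    // 3. Remove the now-empty staging directory.
    fs::remove_dir(staging)
}
```

Because only entries inside the database directory are deleted or renamed, the directory itself is left in place, which is the property the mount-point case needs.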

fix #1833

Co-authored-by: Tamo <tamo@meilisearch.com>

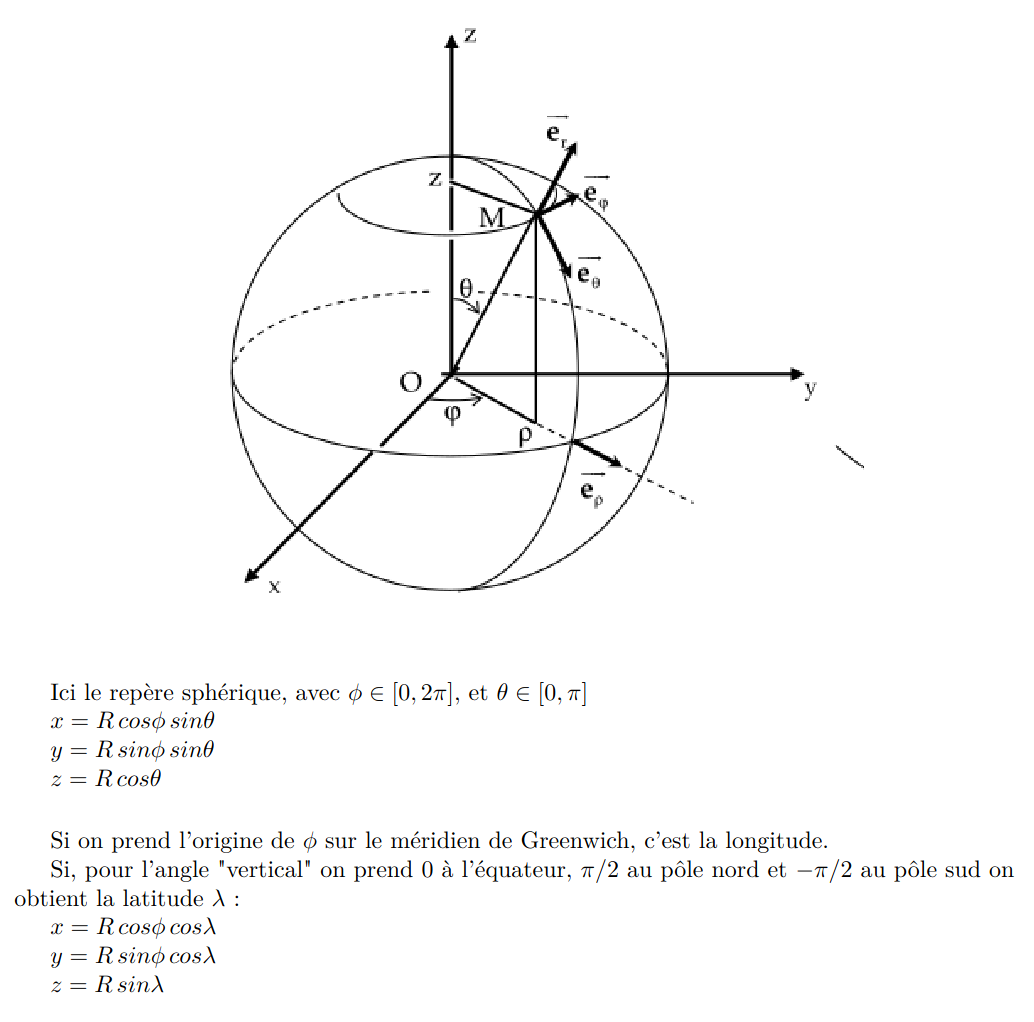

424: Store the geopoint in three dimensions r=Kerollmops a=irevoire

Related to this issue: https://github.com/meilisearch/MeiliSearch/issues/1872

Fix the whole computation of distance for any “geo” operations (sort or filter). Now when you sort points they are returned to you in the right order.

And when you filter on a specific radius you only get points included in the radius.

This PR changes the way we store the geo points in the RTree.

Instead of considering the latitude and longitude as orthogonal coordinates, we convert them to real orthogonal coordinates projected on a sphere with a radius of 1.

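This is the conversion formula, with $\varphi$ the latitude and $\lambda$ the longitude, both in radians:

$$
x = \cos\varphi \cos\lambda, \qquad y = \cos\varphi \sin\lambda, \qquad z = \sin\varphi
$$

Which, in rust, translates to this function: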

```rust
/// Convert a `[lat, lng]` pair in degrees to `[x, y, z]` coordinates on the unit sphere.
pub fn lat_lng_to_xyz(coord: &[f64; 2]) -> [f64; 3] {
    let [lat, lng] = coord.map(|f| f.to_radians());
    let x = lat.cos() * lng.cos();
    let y = lat.cos() * lng.sin();
    let z = lat.sin();

    [x, y, z]
}
```

Storing the points on a sphere is easier / faster to compute than storing the point on an approximation of the real earth shape.

But when we need to compute the distance between two points we still need to use the haversine distance which works with latitude and longitude.

So, to keep search-time computation to a minimum, I'm now associating every point with its `DocId` and its lat/lng.
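
For reference, the haversine distance mentioned above can be sketched like this. It is a generic, textbook version and not necessarily the implementation used by the PR: the function name `haversine_distance` and the mean Earth radius constant are assumptions made for this example.

```rust
/// Mean Earth radius in meters (assumed value for this sketch).
const EARTH_RADIUS_M: f64 = 6_371_000.0;

/// Great-circle distance in meters between two `[lat, lng]` points in degrees.
pub fn haversine_distance(a: &[f64; 2], b: &[f64; 2]) -> f64 {
    let [lat1, lng1] = a.map(|f| f.to_radians());
    let [lat2, lng2] = b.map(|f| f.to_radians());

    let d_lat = lat2 - lat1;
    let d_lng = lng2 - lng1;

    // Haversine term: sin^2(d_lat / 2) + cos(lat1) * cos(lat2) * sin^2(d_lng / 2)
    let h = (d_lat / 2.0).sin().powi(2)
        + lat1.cos() * lat2.cos() * (d_lng / 2.0).sin().powi(2);

    // Clamp to 1.0 to guard against rounding errors before taking the arcsine.
    2.0 * EARTH_RADIUS_M * h.sqrt().min(1.0).asin()
}
```

Since it takes latitude and longitude directly, keeping the lat/lng next to each stored point avoids converting the xyz coordinates back at search time.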

Co-authored-by: Tamo <tamo@meilisearch.com>

2060: chore(all) set rust edition to 2021 r=MarinPostma a=MarinPostma

Set the rust edition for the project to 2021.

This makes the MSRV v1.56.

#2058

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

2059: change indexed doc count on error r=irevoire a=MarinPostma

Change `indexed_documents` and `deleted_documents` to return 0 instead of null when they are empty and the task has failed.

close #2053

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

2056: Allow any header for CORS r=curquiza a=curquiza

Bug fix: a CORS error was triggered when trying to send the `User-Agent` header via the browser.

`@bidoubiwa` thanks for the bug report!

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

429: Benchmark CIs: not use a default label to call the GH runner r=irevoire a=curquiza

Since we now have multiple self-hosted GitHub runners, we need to differentiate them when calling them in the CI. The `self-hosted` label is the default one, so we need to use the unique and appropriate label for the benchmark machine.

<img width="925" alt="Screenshot 2022-01-04 at 15 42 18" src="https://user-images.githubusercontent.com/20380692/148079840-49cd7878-5912-46ff-8ab8-bf646777f782.png">

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

428: Reintroduce the gitignore for the fuzzer r=Kerollmops a=irevoire

Reintroduce the gitignore in the fuzz directory

Co-authored-by: Tamo <tamo@meilisearch.com>

425: Push the result of the benchmarks to influxdb r=irevoire a=irevoire

We now execute a benchmark for every PR merged into main and then upload the results to influxdb.

Co-authored-by: Tamo <tamo@meilisearch.com>

2011: bug(lib): drop env on last use r=curquiza a=MarinPostma

Fixes the `too many open files` error when running tests by closing the environment on last drop.

To check that we are actually the last owner of the `env` we plan to drop, I have wrapped all envs in `Arc` and check that we hold the last reference to it.
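
One way to express the "last owner" check is `Arc::try_unwrap`, which only succeeds when no other reference exists. The sketch below illustrates that idea and is not the code of the PR: the `Env` struct, its `close` method and `close_if_last` are hypothetical stand-ins.

```rust
use std::sync::Arc;

/// Hypothetical stand-in for an environment handle holding open files.
pub struct Env {
    pub name: String,
}

impl Env {
    /// Consume the environment and release its resources.
    /// Returns the name so the caller can see which env was closed.
    pub fn close(self) -> String {
        // A real environment would release its file descriptors here.
        self.name
    }
}

/// Close the environment only if `env` is the last reference to it.
/// If other owners remain, the `Arc` is handed back untouched.
pub fn close_if_last(env: Arc<Env>) -> Result<String, Arc<Env>> {
    // `try_unwrap` returns `Ok(inner)` only when the strong count is exactly 1.
    Arc::try_unwrap(env).map(Env::close)
}
```

With this shape, a caller that still shares the env gets its `Arc` back and nothing is closed early.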

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

2036: chore(ci): Enable rust_backtrace in the ci r=curquiza a=irevoire

This should help us understand the unreproducible panics that happen in the CI all the time.

Co-authored-by: Tamo <tamo@meilisearch.com>

2035: Use self hosted GitHub runner r=curquiza a=curquiza

Checked with `@tpayet`: we have created a self-hosted GitHub runner to save time when pushing the docker images.

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>